One of the most interesting features Databricks has released recently is Databricks Apps, now in public preview: a new way to host and share interactive tools directly within the platform.

I’ve been testing Databricks Apps since last summer, when it was still in private preview. At that time there was no UI, so everything had to be deployed manually as code. Since then, there have been many quality-of-life improvements, and I feel it’s now in good enough shape to start talking about where Databricks Apps shine and when you might want to reconsider using them.

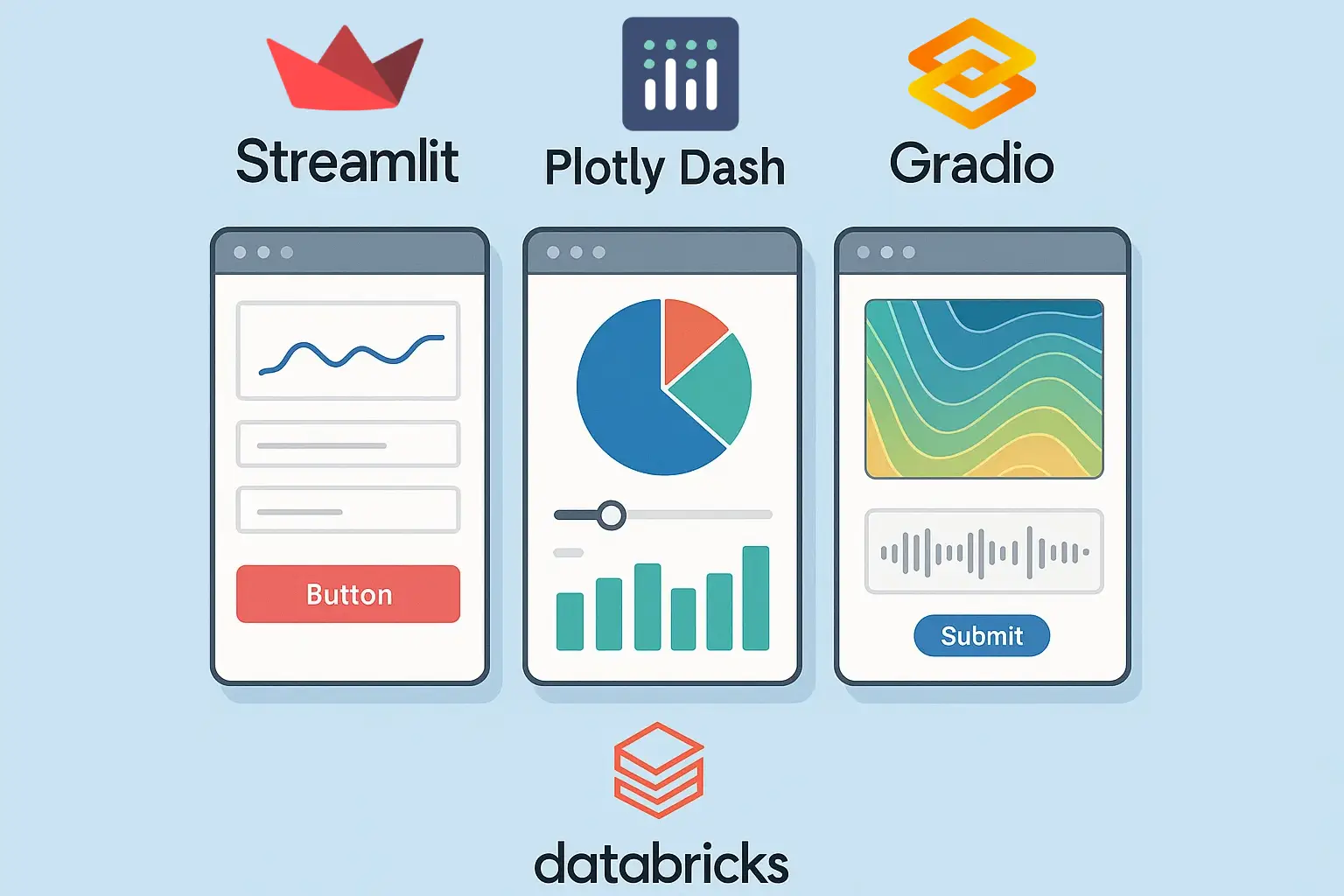

At a high level, Databricks Apps let you build and deploy full-stack applications (e.g., dashboards, forms, and workflows) directly from the Databricks workspace, using familiar technologies like Streamlit.

Benefits of Databricks Apps

The benefits of running a Streamlit-style application directly in Databricks include:

- Unified Authentication: Apps automatically inherit Databricks workspace authentication, so you don’t need to manage separate user identities or tokens.

- Tight Integration with Workspace Assets: You can easily reference workspace files, queries, and dashboards without complex configuration.

- Simplified Deployment: Building and deploying an application is fast — no need to set up a separate cloud infrastructure stack just to host a small internal tool.

- Scalability: Apps scale automatically with Databricks infrastructure, handling concurrent users without complex tuning.

- Cost Efficiency for Internal Tools: For internal, low-to-moderate usage apps, Databricks Apps can be much cheaper and faster to maintain compared to spinning up a dedicated app service.

Limitations to Be Aware Of

However, there are a few important limitations:

- File Size Restrictions: Files referenced by an app cannot exceed 10 MB in size.

- Limited Compute Environment: Apps run in a restricted compute environment with only a few pre-installed libraries (the full list is in the Databricks documentation). Native Spark execution is not available.

- Challenging Data Access: Accessing Unity Catalog data is less seamless than expected. You often need to use the `databricks-sql-connector` library, which has relatively poor performance for backend applications.

- No Custom Domains: This is perhaps the biggest limitation for me personally. You cannot configure a custom domain or white-label the app URL, which leads to complex, hard-to-remember URLs and a poor user experience for non-technical audiences. I’ve been told custom DNS is not on the roadmap at this time.
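If you are unsure whether an app bundle will run into the file-size limit, a quick pre-deploy scan can catch it early. The sketch below is a hypothetical helper (`oversized_files` is my own name, not part of any Databricks tool) that walks an app's source directory with the Python standard library and reports every file above the 10 MB limit mentioned earlier. It assumes the limit applies per file and uses the stricter decimal reading of 10 MB (10,000,000 bytes); check the current documentation before relying on the exact threshold.

```python
from pathlib import Path

# Assumption: a per-file limit of 10 MB, read as decimal megabytes
# (the stricter interpretation). Adjust if the documented limit differs.
MAX_FILE_BYTES = 10 * 1000 * 1000


def oversized_files(app_dir: str, limit: int = MAX_FILE_BYTES) -> list[tuple[str, int]]:
    """Return (relative_path, size_in_bytes) for every file larger than `limit`.

    Hypothetical pre-deploy helper; it recursively scans `app_dir` and
    reports offending files in sorted path order.
    """
    root = Path(app_dir)
    results = []
    for path in sorted(root.rglob("*")):
        # Directories are skipped; only regular files count toward the limit.
        if path.is_file():
            size = path.stat().st_size
            if size > limit:
                results.append((path.relative_to(root).as_posix(), size))
    return results
```

For example, `oversized_files("my_app")` returns an empty list when every file is under the limit, so it is easy to wire into a CI step that fails before deployment.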

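To make the data-access point more concrete, here is a minimal sketch of querying a SQL warehouse from inside an app with `databricks-sql-connector`, authenticating through the Databricks SDK so the app reuses the credentials the platform provides instead of a personal token. It rests on a few assumptions: `databricks-sql-connector` and `databricks-sdk` are both listed in the app's requirements, `Config()` can resolve the app's credentials from its environment, and `DATABRICKS_WAREHOUSE_ID` is a hypothetical environment variable you would point at your own SQL warehouse. The helper names are also my own.

```python
import os


def warehouse_http_path(warehouse_id: str) -> str:
    """Build the HTTP path used to reach a SQL warehouse."""
    return f"/sql/1.0/warehouses/{warehouse_id}"


def server_hostname(host: str) -> str:
    """Strip any URL scheme and trailing slash so only the hostname remains."""
    for prefix in ("https://", "http://"):
        if host.startswith(prefix):
            host = host[len(prefix):]
    return host.rstrip("/")


def run_query(query: str) -> list:
    """Run `query` on the configured SQL warehouse and return all rows.

    Assumptions: databricks-sql-connector and databricks-sdk are installed,
    and DATABRICKS_WAREHOUSE_ID (a hypothetical variable) holds the warehouse ID.
    """
    # Imported lazily so the pure helpers above work without these packages.
    from databricks import sql
    from databricks.sdk.core import Config

    # Config() resolves host and credentials from the app's environment.
    cfg = Config()
    with sql.connect(
        server_hostname=server_hostname(cfg.host),
        http_path=warehouse_http_path(os.environ["DATABRICKS_WAREHOUSE_ID"]),
        credentials_provider=lambda: cfg.authenticate,
    ) as connection:
        with connection.cursor() as cursor:
            cursor.execute(query)
            return cursor.fetchall()
```

Each call is a round trip to a SQL warehouse rather than work done in a local Spark session, which is part of why this route can feel slow for backend-heavy apps.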
When to Use Databricks Apps

Given the strengths and limitations, here are some use cases where Databricks Apps make a lot of sense:

- Internal Tools for Technical Teams: Quick dashboards, workflow runners, or exploration tools for data scientists and engineers.

- Data Validation or QA Apps: Lightweight applications to validate data submissions, check data quality, or monitor pipelines.

- Operational Dashboards: Internal dashboards where speed of deployment matters more than polish or public access.

- Prototypes and Proof-of-Concepts: Quickly testing an idea without worrying about spinning up separate hosting environments.

And here are cases where you may want to reconsider:

- Public-Facing Applications: If you need branded experiences, custom URLs, or public access, a traditional web app hosted elsewhere will likely be a better fit.

- Heavy Backend Processing Needs: Apps aren’t intended to run heavy Spark jobs or ETL workloads natively — they’re more frontend-facing.

- Highly Customized UIs: If you need deep UI/UX customization, Databricks Apps may feel too restrictive compared to building in a full web framework.

If you’re already on the Databricks platform, I encourage you to give the public preview a try. If you have thoughts or creative use cases, drop me a line! I’m excited to keep exploring this space, and even more excited to see how Apps can help bring data closer to the people who need it most.